How to Use OpenAI Assistants (API)

This guide walks through several ways to use OpenAI Assistants through the API.

Start

In November 2023, OpenAI launched the Assistants API. An assistant is a bot (really, more than a bot) hosted by OpenAI: we only provide a prompt and specify the tools we expect it to use. Based on the prompt, the assistant then decides which of these tools to use to answer, much like an “Agent”.


Assistant is not just for chatting

Actually, I don’t want to draw a sharp line between Assistants and Chat or Completions. After all, at their core they all generate tokens with an LLM, and the Assistants API can do everything Chat or Completions can, with an Agent added on top.

Assistants can be reused

An Assistant can be created through LangChain, the OpenAI SDK, or the REST API. It is then stored in the OpenAI “cloud” for long-term use, and other applications can reuse it simply by calling retrieve with its ID.
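Because an assistant persists in the cloud, an application only needs to remember its ID. Below is a minimal sketch, assuming an openai-python v1 client (`OpenAI()`); `load_or_create_assistant` and the local JSON file that stores the ID are hypothetical names used only for illustration.

```python
import json
from pathlib import Path


def load_or_create_assistant(client, id_file: Path, **create_kwargs):
    """Return a stored assistant if its ID was saved before, else create one.

    `client` is assumed to be an openai-python v1 client; `id_file` is a
    hypothetical local file that remembers the assistant ID between runs.
    """
    if id_file.exists():
        assistant_id = json.loads(id_file.read_text())["assistant_id"]
        # Reuse the assistant that already lives in the OpenAI cloud.
        return client.beta.assistants.retrieve(assistant_id)
    # First run: create the assistant and remember its ID locally.
    assistant = client.beta.assistants.create(**create_kwargs)
    id_file.write_text(json.dumps({"assistant_id": assistant.id}))
    return assistant
```

For example, `load_or_create_assistant(OpenAI(), Path("assistant.json"), name="Knowledge Assistant", model="gpt-4-1106-preview", tools=[{"type": "retrieval"}])` creates the assistant once and retrieves it on every later call.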

It can also be created “manually” in the “cloud”, but that’s not the focus of this article.

Content

We will go from simple to complex, starting with the simplest way to use an assistant and pointing out the key parts along the way:

- Using LangChain

- Using OpenAI Python SDK

- Using REST API

Seeing all three will make you aware of what happens behind the “simple method” whenever you use it in the future.

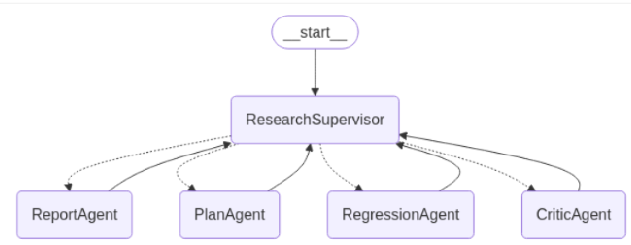

Assistants architecture

I recommend visiting the official website to understand the concepts. Here is a brief summary:

You instantiate an Assistant object, create a Thread object as the conversation context between the user and the Assistant, add a Message describing what to do, and start a Run. A Run is one execution of the Assistant on that Thread, and it goes through its own lifecycle of statuses.

More details:

https://platform.openai.com/docs/assistants/how-it-works/objects

Tool and Run

The Assistant will select and use the appropriate Tool based on its understanding of the prompt, which is very similar to the “Agent” in LangChain.

Tool list (always updating)

https://platform.openai.com/docs/assistants/tools/tools-beta
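In the v1 Assistants API used throughout this article, `tools` is a list of dicts, one per tool. The sketch below shows the three kinds this article relies on; `function_tool` is a hypothetical helper, and `retrieval` is the v1 name of the file-search tool (check the tool list above for the current names).

```python
import json


def function_tool(name: str, description: str, properties: dict, required: list[str]) -> dict:
    """Build a function-tool entry; the model reads `description` to decide when to call it."""
    return {
        "type": "function",
        "function": {
            "name": name,
            "description": description,
            "parameters": {"type": "object", "properties": properties, "required": required},
        },
    }


tools = [
    {"type": "code_interpreter"},  # built-in: runs code in OpenAI's sandbox
    {"type": "retrieval"},  # built-in (v1): searches files attached to the assistant
    function_tool(
        "translate_to_de_string",
        "Use this function to translate a string into German",
        {"string": {"type": "string", "description": "Any string input by user to translate"}},
        ["string"],
    ),
]

print(json.dumps(tools, indent=2))
```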

LangChain

For the first example, using LangChain, we’ll create a code-generation assistant. Its result is JSON containing the code, the programming language name, and an explanation. The Tool to use is code_interpreter, which, as the name suggests, is the ideal fit here. For details, please refer to the official website.
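The assistant is asked to reply with a JSON object, often wrapped in a Markdown code fence tagged json. As a rough sketch of what the parsing step amounts to (LangChain’s StructuredOutputParser does this, and more, for us), assuming the reply carries a "code" object with "block", "lang", and "explain" keys; `parse_code_reply` is a hypothetical name:

```python
import json
import re

# Matches an optional ```json ... ``` fence around the payload.
FENCE = re.compile(r"```(?:json)?\s*(.*?)\s*```", re.DOTALL)


def parse_code_reply(text: str) -> tuple[str, str, str]:
    """Extract (block, lang, explain) from the assistant's JSON reply."""
    match = FENCE.search(text)
    # Fall back to the raw text when the model did not use a fence.
    payload = json.loads(match.group(1) if match else text)
    code = payload["code"]
    return code["block"], code["lang"], code["explain"]


reply = '```json\n{"code": {"block": "print(1)", "lang": "python", "explain": "Prints 1."}}\n```'
print(parse_code_reply(reply))  # ('print(1)', 'python', 'Prints 1.')
```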

Notice: The assistant support in LangChain is no longer experimental; its implementation has become standard.

At the time of writing, LangChain only offers a way to create an assistant; there is no retrieve API yet. This means that every call creates a new assistant in the OpenAI cloud. To reuse an existing one, we must call the SDK or the REST API.

Using LangChain to call the Assistants API is very straightforward. Here is the code:

Parameters:

- inputs: the prompt, telling the AI what we want it to do.

- instructions: the ‘system’ message.

- tools: a list of tools, e.g. retrieval, code_interpreter, or a list of functions.

- model_name: e.g. gpt-4-1106-preview.

StructuredOutputParser turns the structured output into JSON, so its fields can be read like a dictionary.

```python
import logging

import streamlit as st
from langchain.agents.openai_assistant.base import OpenAIAssistantRunnable, OutputType
from langchain.output_parsers import ResponseSchema, StructuredOutputParser

logger = logging.getLogger(__name__)


def _do_in_langchain(
    inputs, instructions, tools, model_name
) -> tuple[OutputType, StructuredOutputParser]:
    # Describe the JSON we expect back under the "code" key.
    structured_output_parser = StructuredOutputParser.from_response_schemas(
        [
            ResponseSchema(
                name="code",
                description="""A code snippet block, programming language name and explanation:
{ "block": string // the code block, "lang": string // programming language name, "explain": string // explanation of the code}
""",
                type="string",
            ),
        ]
    )
    # Note: this creates a NEW assistant in the OpenAI cloud on every call.
    assis = OpenAIAssistantRunnable.create_assistant(
        "Assistant Bot",
        instructions=instructions
        + "\n\n"
        + f"The format instructions are: {structured_output_parser.get_format_instructions()}",
        tools=tools,
        model=model_name,
    )
    # invoke() creates the thread, adds the message, starts the run and waits for it.
    outputs = assis.invoke({"content": inputs})
    logger.debug(outputs)
    return outputs, structured_output_parser


def _langchain_outputs(outputs, structured_output_parser):
    # The last returned message holds the assistant's answer; parse its JSON.
    struct_output = structured_output_parser.parse(
        outputs[-1].content[-1].text.value
    )
    block = struct_output["code"]["block"]
    lang = struct_output["code"]["lang"]
    explain = struct_output["code"]["explain"]
    st.write(f"> Code in {lang}")
    st.code(block, language=lang, line_numbers=True)
    st.write(explain)


# main #
model_name = "gpt-4-1106-preview"
inputs = st.text_input("Prompt(Press Enter)", value="Find odd numbers from 1 to 100")
instructions = """As a software developer, your task is to devise solutions to the presented problems and provide implementable code. In cases where the programming language isn't explicitly mentioned, please default to using Python.
Notice: Only return code and associated comments in certain programming languages.
"""
tools = [{"type": "code_interpreter"}]

if inputs:
    outputs, structured_output_parser = _do_in_langchain(
        inputs, instructions, tools, model_name
    )
    if outputs is not None:
        _langchain_outputs(outputs, structured_output_parser)
```

OpenAI Python SDK

In the second example, we’ll use OpenAI’s SDK to run the retrieval tool over documents (a very simple RAG application). The AI will use all the files we have uploaded to the cloud to answer questions.

In the OpenAI backend in the cloud, you can see the files uploaded for a given Assistant.

💰 A benefit of the Assistants API is that we don’t need to split the documents, compute embeddings, or access a vector database. OpenAI handles these tasks for us seamlessly.
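Uploading the documents is the only preparation needed. A minimal sketch, assuming an openai-python v1 client: `client.files.create(file=..., purpose="assistants")` uploads one file, and the returned IDs are what the v1 `assistants.create` call accepts in `file_ids`. `upload_documents` and the `*.pdf` default pattern are hypothetical choices for illustration.

```python
from pathlib import Path


def upload_documents(client, folder: Path, pattern: str = "*.pdf") -> list[str]:
    """Upload every matching file in `folder` for use by assistants; return the file IDs."""
    file_ids = []
    for path in sorted(folder.glob(pattern)):
        with path.open("rb") as fh:
            # purpose="assistants" marks the file as usable by the Assistants API.
            uploaded = client.files.create(file=fh, purpose="assistants")
        file_ids.append(uploaded.id)
    return file_ids
```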


OpenAI’s SDK provides APIs for uploading and retrieving files. In this example, we’ll create the objects exactly as in the diagram shown at the beginning of the article:

- An Assistant is created.

- A Thread is created as the context between the assistant and the user (a Thread is essentially a container for an assistant conversation). Note that creating a Thread doesn’t require the assistant ID.

- A Message is added to the Thread: the initial query or request (the prompt for the AI to respond to), which is passed to the assistant to begin the conversation.

- A Run processes the messages. Its status is one of “queued”, “in_progress”, “requires_action”, “cancelling”, “cancelled”, “failed”, “completed”, and “expired”; the retrieve API returns the latest status.

- When the Run’s status is “completed”, the assistant’s response can be read and the process ends.

Notice: A Run can only start after a Message has been added. Use the retrieve method to get the Run’s updated status.

Here is the code. The only difference at the entry point is that we initialize an OpenAI client object:
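Because a Run is asynchronous, the client has to poll it. Below is a sketch of a polling helper, assuming an openai-python v1 client; the one-second interval, the timeout, and the name `wait_for_run` are arbitrary choices, not values from the API. It stops on every terminal status in the list above (not only “completed”) so that a failed Run cannot loop forever, and it also returns on “requires_action” so the caller can submit tool outputs.

```python
import time

# Statuses after which the Run will not change again on its own.
TERMINAL = {"completed", "failed", "cancelled", "expired"}


def wait_for_run(client, thread_id: str, run_id: str, interval: float = 1.0, timeout: float = 300.0):
    """Poll a Run until it is terminal or needs tool outputs; return the last Run object."""
    deadline = time.monotonic() + timeout
    while True:
        run = client.beta.threads.runs.retrieve(thread_id=thread_id, run_id=run_id)
        if run.status in TERMINAL or run.status == "requires_action":
            return run
        if time.monotonic() > deadline:
            raise TimeoutError(f"Run {run_id} still '{run.status}' after {timeout}s")
        time.sleep(interval)  # "queued", "in_progress" or "cancelling": wait and retry
```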

- client: an instance of the OpenAI client.

- inputs: the prompt, telling the AI what we want it to do.

- instructions: the ‘system’ message.

- tools: a list of tools, e.g. retrieval, code_interpreter, or a list of functions.

- model_name: e.g. gpt-4-1106-preview.

Only a string is returned as the result.

```python
import logging
import time

from openai import OpenAI

logger = logging.getLogger(__name__)

# openai_api_key holds your API key (defined elsewhere in the app).
client = OpenAI(api_key=openai_api_key)


def _do_with_sdk(
    client: OpenAI,
    inputs: str,
    instructions: str,
    tools: list[dict[str, str]],
    model_name: str,
) -> str | None:
    # Attach every file already uploaded to the account to the assistant.
    uploaded_files = client.files.list()
    uploaded_fileids = [f.id for f in uploaded_files.data]
    logger.debug(uploaded_fileids)

    assis = client.beta.assistants.create(
        name="Knowledge Assistant",
        instructions=instructions,
        model=model_name,
        tools=tools,
        file_ids=uploaded_fileids,
    )
    # A thread is created without an assistant ID; the assistant is bound by the run.
    thread = client.beta.threads.create()
    client.beta.threads.messages.create(
        thread_id=thread.id,
        role="user",
        content=inputs,
    )
    # The message must exist before the run starts.
    run = client.beta.threads.runs.create(thread_id=thread.id, assistant_id=assis.id)

    # The run is asynchronous: poll until it reaches a terminal status.
    while True:
        retrieved_run = client.beta.threads.runs.retrieve(
            thread_id=thread.id, run_id=run.id
        )
        logger.debug(retrieved_run)
        if retrieved_run.status == "completed":
            break
        if retrieved_run.status in ("failed", "cancelled", "expired"):
            return None
        time.sleep(1)

    # messages.list returns the newest message first, i.e. the assistant's reply.
    thread_messages = client.beta.threads.messages.list(thread_id=thread.id)
    logger.debug(thread_messages.data)
    return thread_messages.data[0].content[0].text.value
```

REST API

In the last example, we’ll use REST to show how Tools work in the broader sense of Functions. It’s important to understand that OpenAI acts as an “Agent” or “router” that schedules the execution of a function; it does not execute the function itself.

Broadly speaking, a Tool and a Function are the same thing under different names in different contexts: the LLM interprets and understands the user’s intent, and third-party code handles the actual business logic.

Suggestion

If you really want to understand how an Assistant works from the ground up, I suggest looking at the REST version, especially since this case is based on Tool+Function and reveals some parts that LangChain and the SDK hide.

Use Case

The case itself is very simple: enter any piece of text, translate it into German, and then print it. The app shows:

- The resulting text in German.

- The final state of the Run as JSON, for debugging; its status should have reached “completed”.

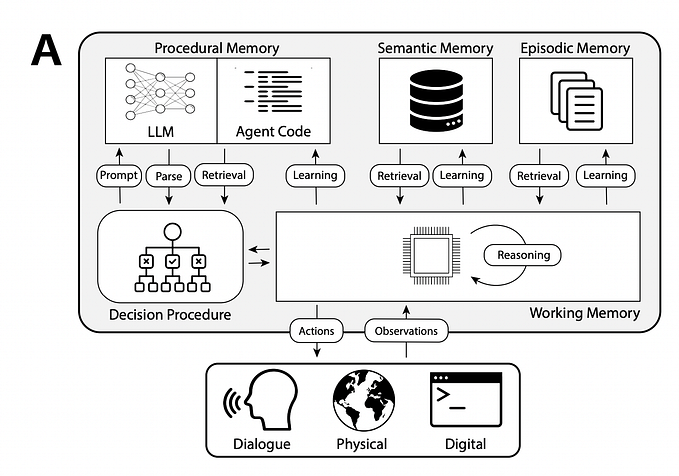

Agent, Tool and Function

Here we need to recall the concepts of Agent, Tool, and Function (as mentioned at the beginning of the article, Assistants are Agents hosted by OpenAI).

We can think of an Agent as an “AI smart scheduler”. This scheduler “intelligently” picks different Tools or Functions, which execute third-party code to accomplish the tasks (or sub-tasks) that the user has prompted via a Message.

The scheduler takes the user’s prompt and produces a suitable result based on its requirements. The prompt (the task) may be broad, so to solve each individual sub-task on the way to the ultimate goal, the LLM (OpenAI) “intelligently” indicates which Tool or Function to use.

This is important: OpenAI (the LLM) does not run code to process sub-tasks; it only returns which Tool or Function should be used, with which arguments. The client code (third-party code) knows where the functions are and executes them.

When we define Tools or Functions for an Assistant, we give them clear descriptions; the scheduling done by the Agent and the LLM is based on these descriptions.

1. We give a prompt (Message) to the Assistant.

2. A Thread is created, and the Message is added to it. Note that creating a Thread doesn’t require the assistant ID.

3. A Run can start once the Message has been added.

4. The Run updates its status automatically.

5. A Run status of “requires_action” means we should call a Tool or Function; the Run’s required_action field then contains all the information needed.

6. Find the local function or class indicated by the Tool.

7. Execute the function and get the result.

8. Immediately call the submit_tool_outputs API with the result, for that Thread and Run.

9. Go back to step 4 if another Tool needs to run.

10. All Tools have been executed.

11. The Run reaches “completed”.

12. End of the Assistant process.
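The tool-handling part of this loop (find the local function, execute it, submit the result) can be sketched as a pure function over the Run JSON returned by the REST API. This is a hedged sketch rather than the article’s code: `build_tool_outputs` and the `local_functions` registry are hypothetical names. It answers every entry in `tool_calls`, because a Run can request several tools at once and the API expects outputs for all of them in one submission.

```python
import json
from typing import Callable


def build_tool_outputs(run: dict, local_functions: dict[str, Callable[..., str]]) -> dict:
    """Turn a requires_action Run into the JSON body for .../submit_tool_outputs."""
    calls = run["required_action"]["submit_tool_outputs"]["tool_calls"]
    outputs = []
    for call in calls:
        func = local_functions[call["function"]["name"]]  # find the local function
        kwargs = json.loads(call["function"]["arguments"])  # arguments arrive as a JSON string
        outputs.append({"tool_call_id": call["id"], "output": str(func(**kwargs))})
    return {"tool_outputs": outputs}


run = {
    "status": "requires_action",
    "required_action": {
        "type": "submit_tool_outputs",
        "submit_tool_outputs": {"tool_calls": [
            {"id": "call_1", "type": "function",
             "function": {"name": "print_string", "arguments": '{"string": "Hallo Welt"}'}},
        ]},
    },
}
body = build_tool_outputs(run, {"print_string": lambda string: string})
print(json.dumps(body))  # {"tool_outputs": [{"tool_call_id": "call_1", "output": "Hallo Welt"}]}
```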

Notice: With REST, the Run’s status is obtained from the Thread ID and Run ID; the SDK’s retrieve method simply wraps this. What the SDK conceals is just a few REST API calls.

Once again: a Run can only start after a message has been added.

Here’s another example of a LangChain Agent: it uses an Agent to automatically annotate meaningful objects in an image.

Back to our case: we have defined two Tools (Functions) to execute:

- A tool that translates any string into German.

- A tool that prints the German result.

The AI (OpenAI, the LLM, the Agent) knows when to call the translate tool and instructs the client code to execute the corresponding local function (a translator class). After the output is submitted, the AI guides the client to use the next tool, which prints the result.

Here is the main code:

- key: the OpenAI API key.

- inputs: the prompt, telling the AI what we want it to do.

- instructions: the ‘system’ message.

- model_name: e.g. gpt-4-1106-preview.

A string is returned as the result, along with the final Run JSON for debugging.

```python
import json
import logging
import time

logger = logging.getLogger(__name__)

HOST = "api.openai.com"

# Function definitions as Python dicts, serialized with json.dumps below
# (safer than concatenating JSON strings by hand).
TRANSLATOR_DEF = {
    "name": "translate_to_de_string",
    "description": "Use this function to translate a string into German",
    "parameters": {
        "type": "object",
        "properties": {
            "string": {"type": "string", "description": "Any string input by user to translate"}
        },
        "required": ["string"],
    },
}
PRINT_FUNC_DEF = {
    "name": "print_string",
    "description": "Use this function to print any string performed by translate_to_de_string",
    "parameters": {
        "type": "object",
        "properties": {
            "string": {"type": "string", "description": "Any string input by user to print"}
        },
        "required": ["string"],
    },
}


def _do_in_rest(
    key: str,
    inputs: str,
    instructions: str,
    model_name: str,
) -> tuple[str | None, dict] | None:
    # _post(key, host, path, body) and _get(key, host, path) are the app's own
    # HTTP helpers (not shown in the article); both return the parsed JSON reply.
    assis_create = json.dumps(
        {
            "name": "Print Assistant",
            "model": model_name,
            "instructions": instructions,
            "tools": [
                {"type": "function", "function": TRANSLATOR_DEF},
                {"type": "function", "function": PRINT_FUNC_DEF},
            ],
        }
    )
    assis_res = _post(key, HOST, "/v1/assistants", assis_create)
    logger.debug(assis_res)
    assis_id = assis_res["id"]

    # A thread needs no assistant ID; "{}" means no initial messages.
    thread_res = _post(key, HOST, "/v1/threads", "{}")
    logger.debug(thread_res)
    thread_id = thread_res["id"]

    message_create = json.dumps({"role": "user", "content": inputs.strip()})
    message_res = _post(key, HOST, f"/v1/threads/{thread_id}/messages", message_create)
    logger.debug(message_res)

    # Warning: the message must be created before a run is started.
    run_create = json.dumps({"assistant_id": assis_id})
    run_res = _post(key, HOST, f"/v1/threads/{thread_id}/runs", run_create)
    logger.debug(run_res)
    run_id = run_res["id"]
    run_status = run_res["status"]

    result = None
    while True:
        logger.debug("🏃 Run status: " + run_status)
        run_res = _get(key, HOST, f"/v1/threads/{thread_id}/runs/{run_id}")
        run_status = run_res["status"]
        if run_status == "completed":
            break
        if run_status in ("failed", "cancelled", "expired"):
            return None
        if (
            run_status != "requires_action"
            or run_res["required_action"]["type"] != "submit_tool_outputs"
        ):
            time.sleep(1)  # still queued/in progress: wait before polling again
            continue

        # Execute every requested tool call locally and collect one output per call.
        tool_outputs = []
        for tool_call in run_res["required_action"]["submit_tool_outputs"]["tool_calls"]:
            func_name = tool_call["function"]["name"]
            result = json.loads(tool_call["function"]["arguments"])["string"]
            logger.debug(f"Tool call id: {tool_call['id']}")
            if func_name == "translate_to_de_string":
                # DeTranslator is the app's local translator class (not shown).
                de_translator = DeTranslator()
                result = de_translator(result)
            elif func_name == "print_string":
                # We don't need to really print, just assign the
                # result and return it to the caller.
                result = f"{result}"
            tool_outputs.append({"tool_call_id": tool_call["id"], "output": result})

        # Important: send bytes, otherwise the POST fails with a 400 server error.
        submit_tool_outputs = json.dumps({"tool_outputs": tool_outputs}).encode()
        logger.debug(submit_tool_outputs)
        submit_res = _post(
            key,
            HOST,
            f"/v1/threads/{thread_id}/runs/{run_id}/submit_tool_outputs",
            submit_tool_outputs,
        )
        logger.debug(submit_res)

    return result, run_res
```

Summary

Notice

OpenAI offers the backend in the cloud for storing all created assistants and uploaded files:

https://platform.openai.com/assistants and https://platform.openai.com/files

My Comment

I recommend LangChain as the default approach because it is simple and straightforward, just as with Chat or Completions. Most LLM-based applications do not call the REST API directly, so the SDK is a reasonable middle ground. It’s important to remember that an assistant’s Run is asynchronous: its outcome is undetermined until its status reaches “completed” (or a terminal state such as “failed” or “expired”).

The main advantage of the Assistants API is that we can avoid the intricate details of RAG applications, such as vector database access, document/file splitting, and so on. The API manages these tasks for us, and all we have to do is track the status of the Run.

Full code (streamlit)

Thank you for reading.